Table of Contents

- What is web scraping?

- What Is the difference between web Scraping and web crawling?

- Requests library

- Beautifulsoup

- Scrapy

- How to set up and activate a virtual environment

- Selenium

- Conclusion

What is web scrapping?

Simply put, web scraping is the process of extracting and automating data in an efficient and fast way regardless of the quantity of the data involved, and you can also save this data in any format of your choice, such as CSV, JSON, or XML.

What Is the Difference Between Web Scraping and Web Crawling?

In web scrapping, we are only interested in getting the data from the website, e.g., product price, product description, e.t.c. Web crawling is the process of crawling different URLs on a web page in an automated way and then scraping the information from those URLs.

Python Frameworks for web scraping

1. Requests Library

Requests in Python is a module used to send all kinds of HTTP requests. HTML requests are typically made to extract data from a web page by forwarding the requests to the website server.

This basic library, however, does not parse the retrieved HTML data. You’ll have to use more advanced libraries for that, as they only retrieve the static content of a web page.

To import this library, you only need to import the library without pip installing, as shown in the code below:

import requests

To request the HTML data from a web server, we can use the following syntax.

res = requests.get("https://www.quora.com/)

To print out the HTML data.

print(res.text)

Printing it out might be a bit messy to the eye, but using the inspect tool or view page source on any browser can save you from scrolling through voluminous amounts of data.

Link to the Requests Library Docs

1. BeautifulSoup

The first positive is that it's well known for being user-friendly. This framework is simple to set up. Beautifulsoup can extract HTML and XML elements from a web page with just a few lines of code.

Beautifulsoup does have its drawbacks. However, one beautiful soup requires dependencies. We import BS4 instead of the “beautifulsoup” package. Dependencies can make It complicated to transfer code from other projects and machines.

It is important to note that beautifulsoup is a slow web scraper for large datasets, so there would be noticeable bottlenecks and slowdowns.

Another disadvantage to note here is that beautifulsoup is mainly used for HTML and XML, and parsing BS4 may not be able to handle scraping information regarding the JavaScript used on a webpage. This can be frustrating as many websites, especially e-commerce websites, now use JavaScript to dynamically load their content.

To install this library, you need to type the following command into your terminal.

pip install Beautifulsoup4

from bs4 import BeautifulSoup as bs

import requests

Using the request library and bs4, let's see how we can get the Html content from a page and print it out, by running the code below:

res = requests.get("https://en.wikipedia.org/wiki/Portal:Current_events")

soup = bs(res.content, features="lxml")

print(soup.prettify())

Link to the official Beautifulsoup documentation

3. Scrapy

Scrapy is a Python framework built for web scraping and crawling websites to extract structured data. Scrapy can also be used to extract data using APIs. It is a full-fledged web scraping framework as it does the job of both web scraping and web crawling.

Scrapy gives you the ability to store the data in structured data formats such as:

- JSON

- CSV (comma separated values)

- XML

- JSON lines

- Pickle

- Marshal

Although the learning curve can be really exponential compared to using the beautifulsoup library, with consistent practice, you will get a hang of it in no time.

What you need:

- Basic knowledge of Python (python 3.10 for this tutorial).

- IDE or a code editor

- A virtual environment

Setting Up Your System

There are several ways to install Scrapy in the Scrapy docs, but it is recommended that you install the Scrapy framework within a virtual environment.

How to set up and activate a virtual environment

To create a virtual Python environment, open your Windows command terminal and run the code below.

C:\Users\Double Arkad>py -m venv env

“env” is the name of the virtual environment. You can give it any name you want. To enter the virtual environment, input the code below

cd env

Now that you have created the virtual environment, you will need to activate it before you can use it on your project. On your windows terminal run the following code to activate Venv.

C:\Users\Double Arkad\env>scripts\activate

This will activate the virtual environment and your command prompt will look like this:

(env) C:\Users\Double Arkad\env>

(See this Link if you’re having issues with setting up your virtual environment)

#How to Install scrapy To install Scrapy, type the following command into your terminal:

Pip install scrapy

With Scrapy installed in your terminal, it's time to create your project with the command:

Scrapy startproject wikipedia

By running this command, scrapy creates a project file for you with all the modules of scrapy without you manually creating them. Note: “wikipedia” here is the name of our project.

To go into the directory of the project you just created, input the following command into your terminal:

cd wikipedia

To create the spider class, copy the URL of the web page you are about to crawl and paste it after the name of your spider (spiders are classes that define how a website will be scraped).

Scrapy genspider wikipage https://en.wikinews.org/wiki/Main_Page

“Wikipage” is the name of your spider and “en.wikinews.org/wiki/Main_Page” is the URL of the website you are about to crawl or scrape.

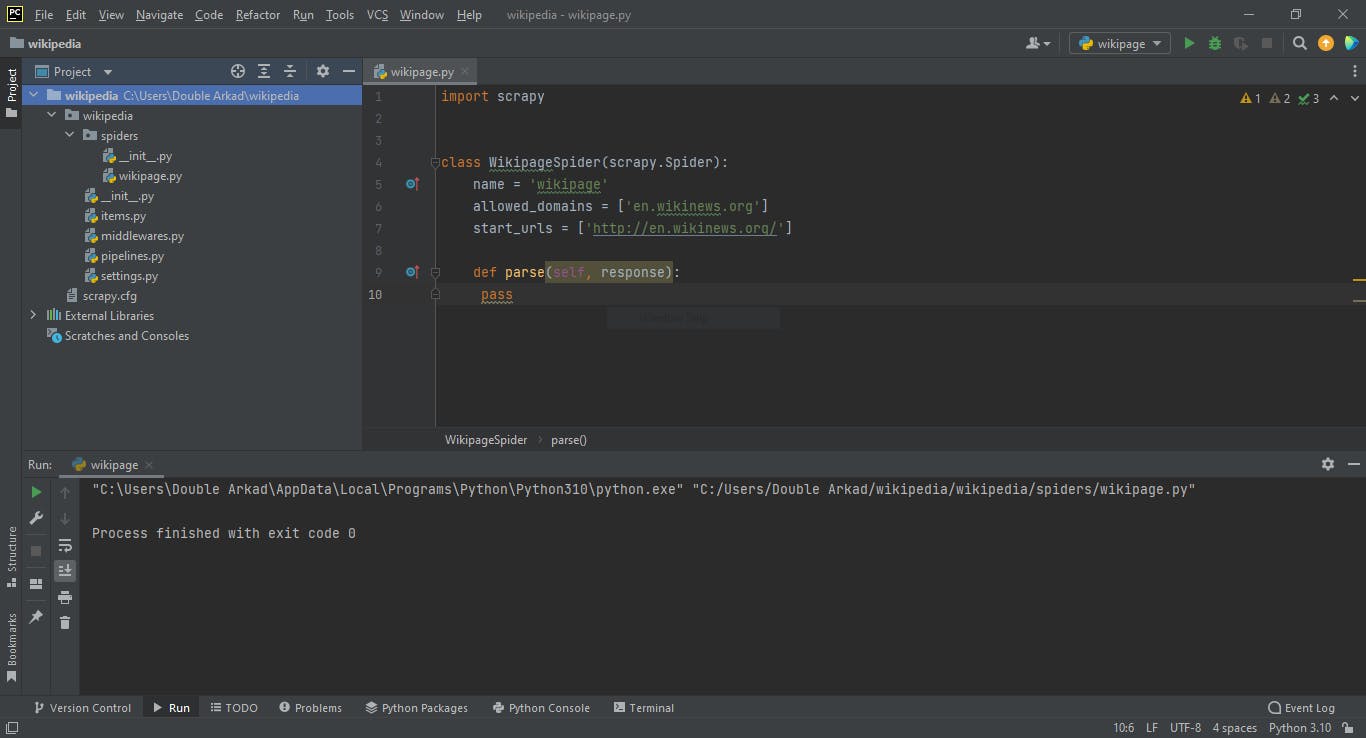

Now head on to your favorite code editor or IDE and open the project folder “Wikipedia” from the virtual environment folder created earlier.

Your project folder should open up similar to the screenshot below

4. Selenium

Selenium is a Python framework that allows us to interact with HTML elements from any website, so, rather than just scraping information, which we can do with Selenium, we can grab information from web pages.

We also interact with it, so we could, in theory, make things like BOTS that we can have. You know, like an automated script that goes through and tests different aspects of our website. It's actually quite useful, and it's pretty easy to get set up.

What do you need?

- Basic knowledge of Python (python 3.10 for this tutorial).

- IDE or a code editor

- A virtual environment

In your terminal, pip install selenium by running the code:

Pip install selenium

Now you have installed selenium, you need to import Selenium before you can use it in your project. You can do this by inputting the code below:

Import selenium

If you do not get an error, then you’re good to go. Otherwise, make sure your pip installation of selenium was successful in your terminal.

Note that to be able to use the webdriver and chromedriver you will need to run the following code to import them from Selenium:

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.chrome.service import Service

from webdriver_manager.chrome import ChromeDriverManager

options = Options()

options.add_argument("start-maximized")

driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()), options=options)

driver.get("https://en.wikinews.org/wiki/Main_Page")

Let's begin interacting with the website we specified above by printing out the title of the webpage:

print (driver.title)

Link to the official selenium docs to find out more.

Conclusion

If you’re looking to start a career in data science or machine learning, you’re already aware of how vital getting data from disparate sources is. For processing these large amounts of data, web scraping is indispensable for gaining fresh insights and deriving new techniques from the information available on the web.

Whether it’s lead generation, competitor monitoring, pricing optimization, product optimization, or social media monitoring, web scraping can help get, process, and store large amounts of data with ease.