Table of Contents

- Why BigQuery?

- How to extract data from MySQL using mysqldump

- Using MySQL Workbench to export data as CSV

- Creating a new project on BigQuery

- How to load a CSV file into BigQuery

Why BigQuery?

Storing and querying massive data sets can be time-consuming and expensive without the right hardware and infrastructure. BigQuery is an enterprise data warehouse that solves this problem by enabling super-fast SQL queries using the processing power of Google's infrastructure. With the volume of data produced by IoT devices, clickstreams, and other sources growing daily, traditional systems can no longer analyze it quickly enough. Analytical solutions like BigQuery are therefore worth considering for data migration and querying.

In this article, you'll learn about BigQuery and its key features, and how to migrate data from MySQL to BigQuery.

Prerequisites:

- A MySQL account

- A BigQuery account

- Knowledge of SQL and its syntax

- In-depth knowledge of databases and data warehouses

Introduction to BigQuery

BigQuery is a fully managed serverless data warehouse that enables scalable analysis over petabytes of data. It is a platform as a service that supports querying using ANSI SQL.

It also has built-in machine learning capabilities, so you can build and operationalize machine learning solutions with simple SQL. You can easily and securely share insights within your organization and beyond as datasets, queries, spreadsheets, and reports. BigQuery lets organizations capture and analyze data in real time through its streaming ingestion capability, so your insights are always current. It is also free for up to 1 TB of data analyzed and 10 GB of data stored each month.

BigQuery transparently and automatically provides durable, replicated storage and high availability at no extra charge and with no additional setup.

Data encryption and security: You have full control over who can access the data stored in BigQuery. BigQuery makes it easy to maintain strong security with fine-grained Cloud Identity and Access Management (IAM), and your data is always encrypted at rest and in transit.

Standard SQL support: BigQuery supports a standard SQL dialect that is ANSI:2011 compliant, which reduces the need for code rewrites and lets you take advantage of advanced SQL features. BigQuery also provides free ODBC and JDBC drivers so that your current applications can interact with BigQuery's engine.

Unique data warehouse services: BigQuery was designed as a cloud-native data warehouse, built to address the needs of data-driven organizations in a cloud-first world. It is Google Cloud Platform's serverless, highly scalable, and cost-effective data warehouse, and it supports both batch and streaming modes for loading data. It can also import data directly from certain software as a service (SaaS) applications using the BigQuery Data Transfer Service.

Ease of implementation: Building your own data warehouse is expensive, time-consuming, and difficult to scale. With BigQuery, you can start loading data right away and pay only for what you use (pay as you go).

Speed: It can process billions of rows in seconds and supports real-time analysis of streaming data.

How to extract data from MySQL using mysqldump

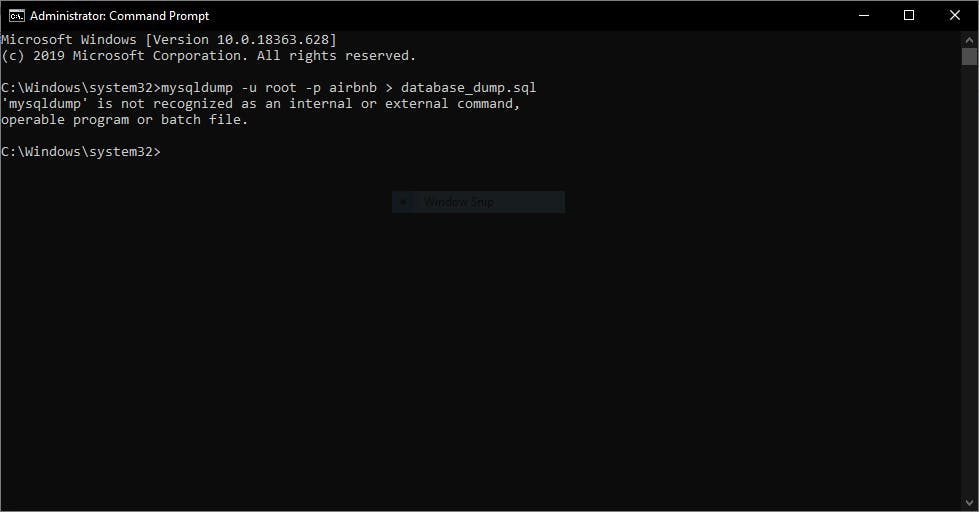

mysqldump is a client utility that is installed together with MySQL. It creates a logical backup of a database or table in MySQL. To back up a database, open the command prompt as an administrator and run the command below:

```
mysqldump -u root -p airbnb > database_dump.sql
```

Here, “airbnb” is the name of the database you want to back up, and “database_dump.sql” is the name of the new dump file you’re about to create, including the .sql extension. The -p flag makes mysqldump prompt for the root user's password.
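If you want to script this step, for example to dump only the airbnb_open_data table used later in this article, the same command can be assembled in Python. This is an illustrative sketch: the helper names are hypothetical, and it swaps shell redirection (>) for mysqldump's --result-file option, which the MySQL documentation recommends on Windows to avoid newline conversion. It assumes mysqldump is on your PATH, or that you pass its full path as exe.

```python
import subprocess

# Sketch: run mysqldump from Python instead of typing the command by hand.
# Assumptions: mysqldump is on PATH (or pass its full path as `exe`), and the
# helper names below are hypothetical, not part of any library.


def mysqldump_args(database, tables=(), user="root",
                   result_file="database_dump.sql", exe="mysqldump"):
    """Build the argument list for mysqldump.

    -p with no value makes mysqldump prompt for the password.
    Listing table names after the database dumps only those tables.
    """
    return [exe, "-u", user, "-p", f"--result-file={result_file}",
            database, *tables]


def run_dump(database, **options):
    """Run mysqldump; check=True raises CalledProcessError if it fails."""
    subprocess.run(mysqldump_args(database, **options), check=True)
```

For example, run_dump("airbnb", tables=["airbnb_open_data"], result_file="airbnb_open_data.sql") dumps a single table. Passing the arguments as a list also avoids shell quoting problems with paths that contain spaces, such as C:\Program Files.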

If you encounter the error below, run mysqldump from the folder where it is installed:

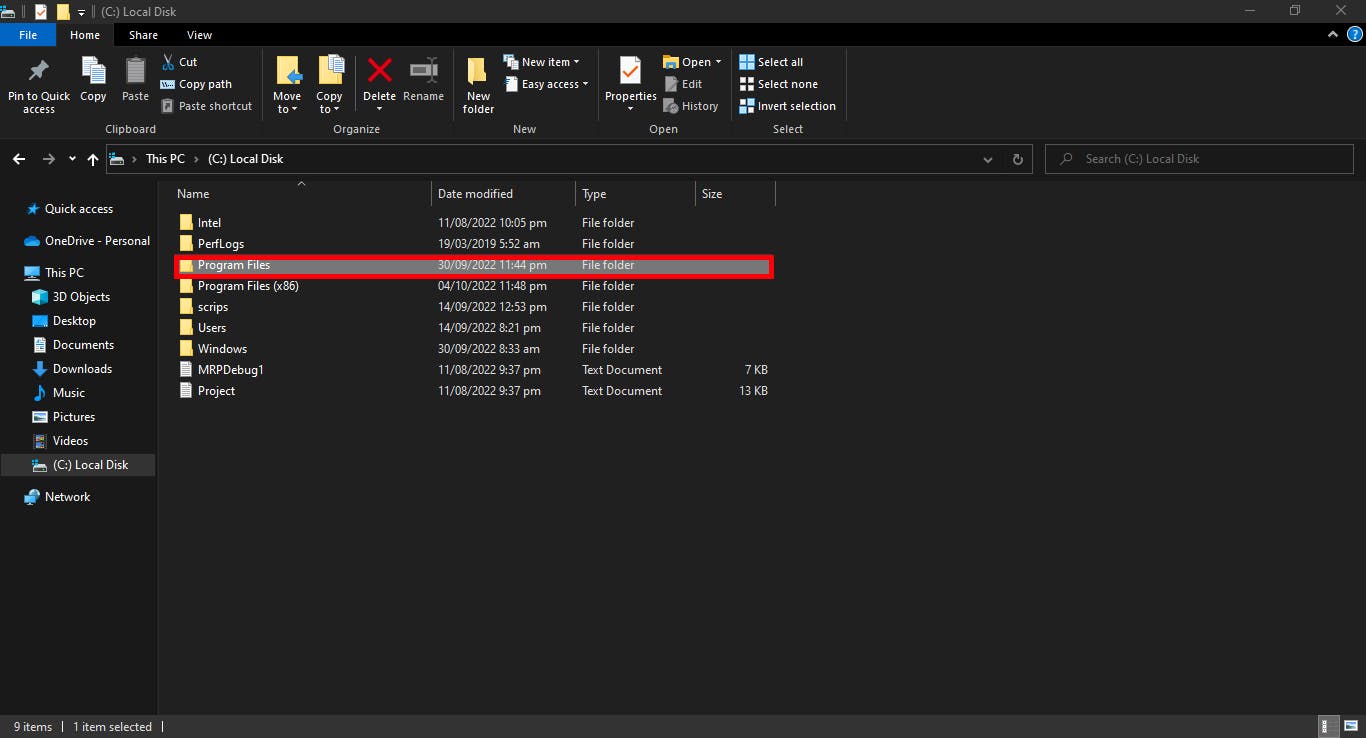

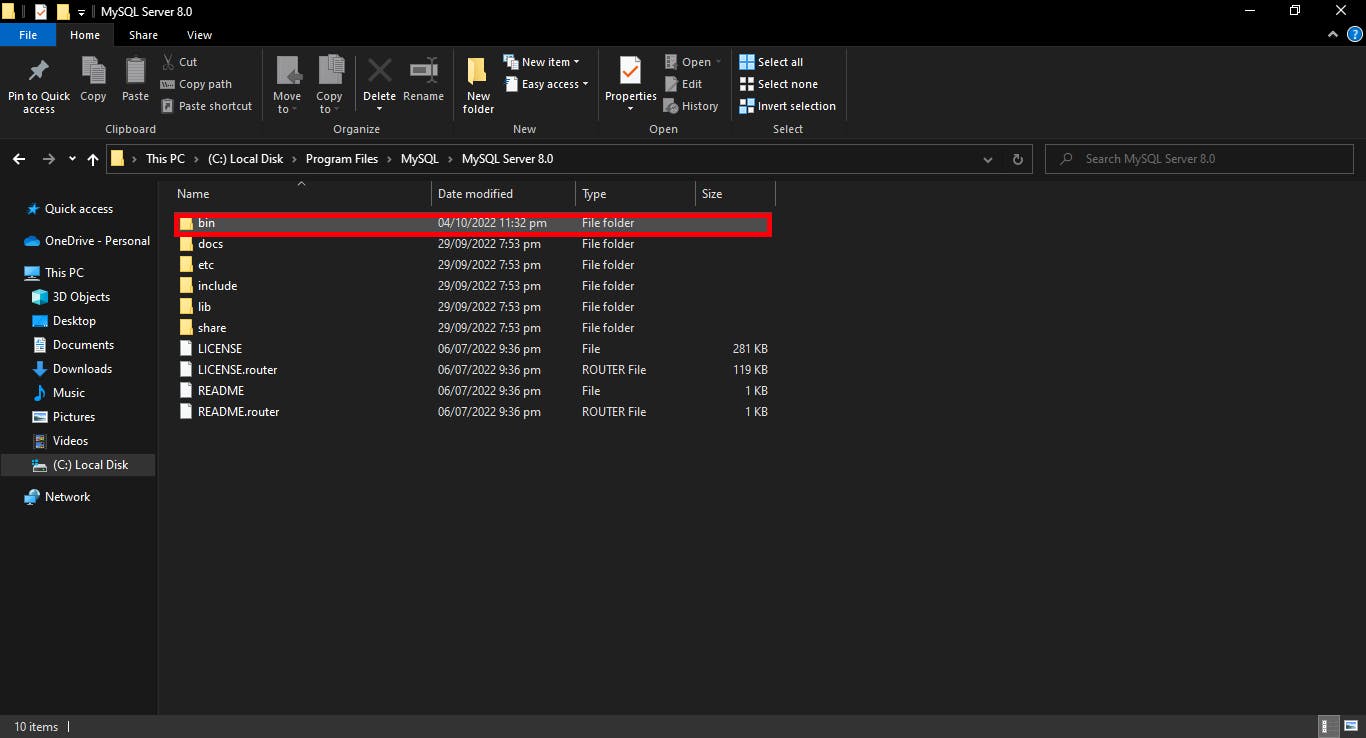

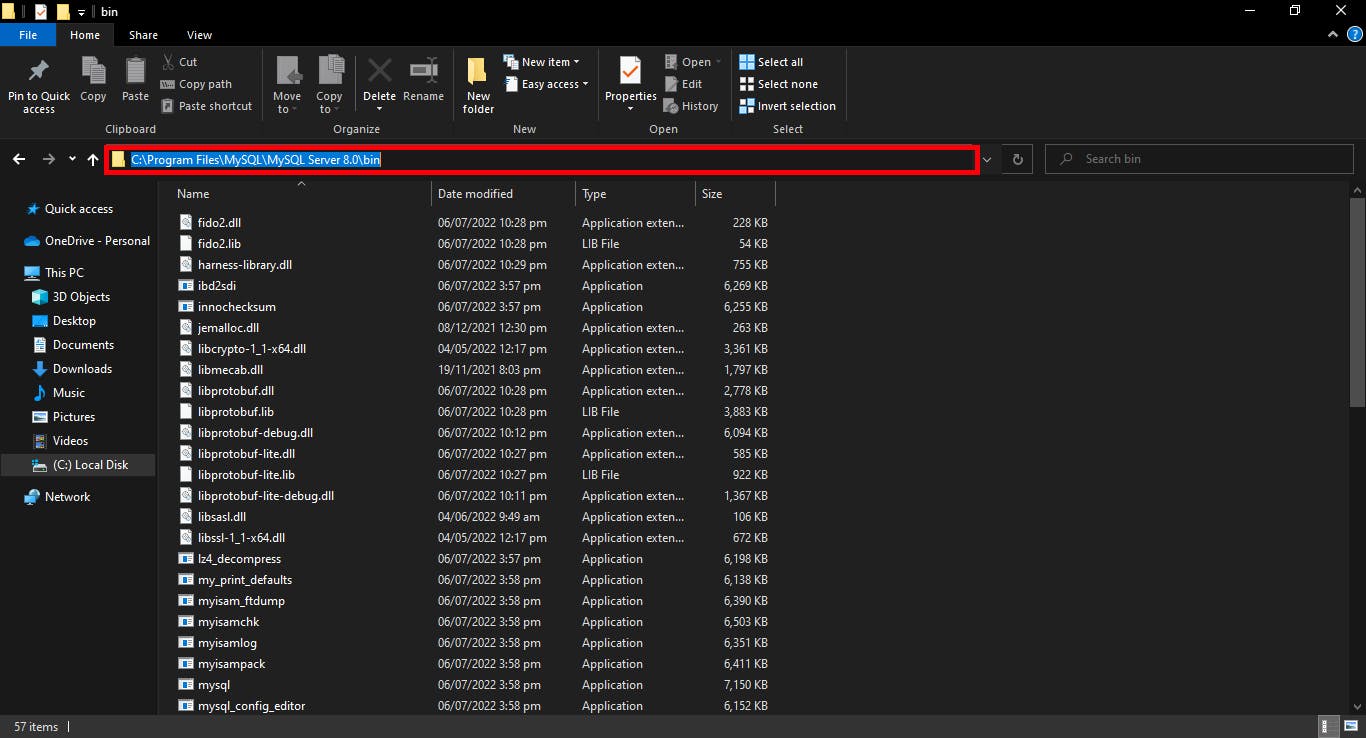

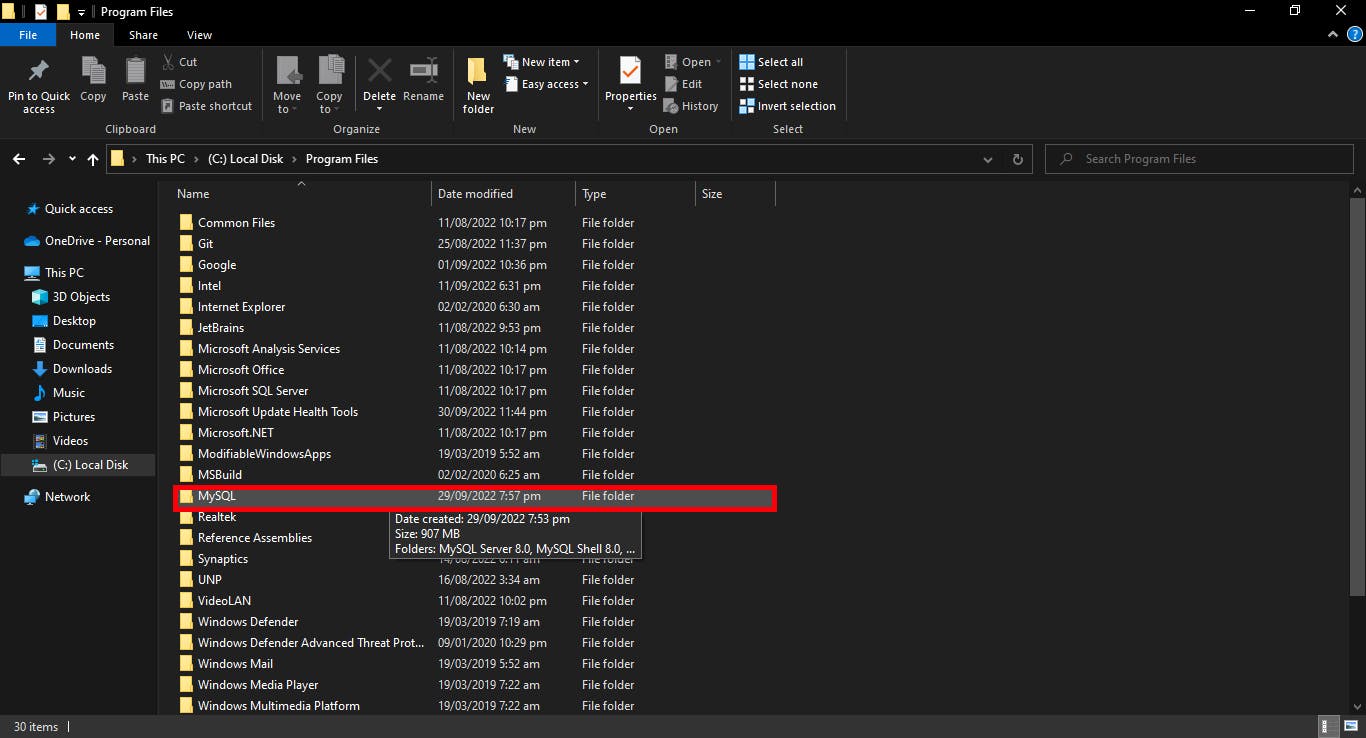

Locate mysqldump in the bin folder of your MySQL installation, copy that folder's path, and change to it in your terminal (adjust the version number in the path to match your installation):

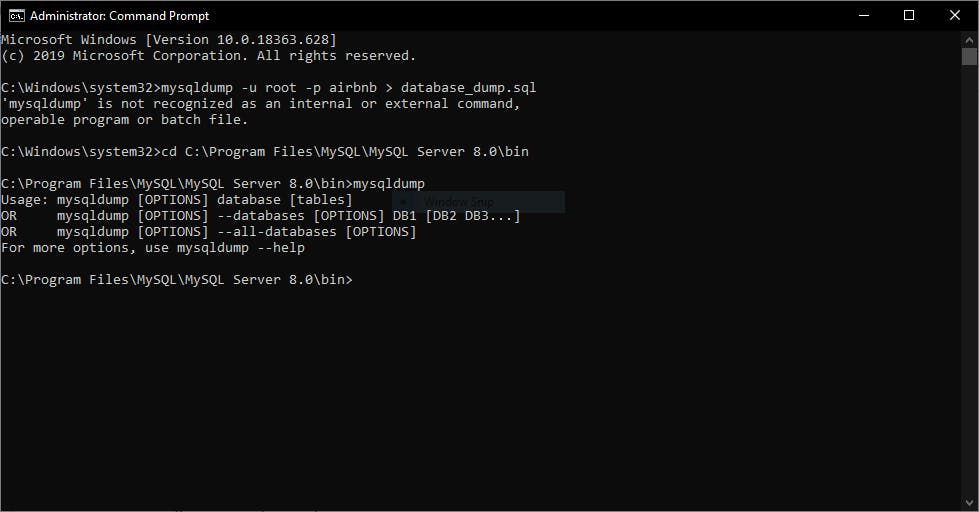

```
cd "C:\Program Files\MySQL\MySQL Server 8.0\bin"
```

Type mysqldump and press Enter to confirm that the command is now recognized. Then run the dump command again:

```
mysqldump -u root -p airbnb > database_dump.sql
```

The backup (dump) file of your database will be created in the bin folder, where you can locate it.

The .sql output has to be converted to a file format that BigQuery can load: CSV, newline-delimited JSON (JSONL), Avro, Parquet, or ORC. Converting a .sql file to CSV requires a script, for example in Python.
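A minimal sketch of such a script, using only the Python standard library, is below. It assumes the dump was created with mysqldump's default options, so each table's rows sit on lines of the form INSERT INTO `table` VALUES (...),(...); with backslash-escaped strings and no column list, and that the file is UTF-8. Binary and hex literals are not handled, and the function names are illustrative rather than an existing tool.

```python
import csv

# Sketch: convert the INSERT statements in a mysqldump file to CSV rows.
# Assumptions: default mysqldump options (one "INSERT INTO `t` VALUES (...),(...);"
# statement per line, no column list, backslash-escaped strings), UTF-8 input.
# Binary/hex literals are not handled.

# Backslash escape sequences that can appear inside MySQL string literals.
_ESCAPES = {"0": "\0", "n": "\n", "r": "\r", "t": "\t", "Z": "\x1a",
            "b": "\b", "'": "'", '"': '"', "\\": "\\"}


def parse_values(values):
    """Parse "(1,'a'),(2,NULL)" into [['1', 'a'], ['2', None]]."""
    rows, i, n = [], 0, len(values)
    while i < n:
        if values[i] != "(":  # skip the commas, ';' and newline between tuples
            i += 1
            continue
        i += 1  # consume "("
        row = []
        while True:
            if values[i] == "'":  # quoted string literal
                i += 1
                chars = []
                while values[i] != "'":
                    if values[i] == "\\":  # escape such as \' or \n
                        nxt = values[i + 1]
                        chars.append(_ESCAPES.get(nxt, nxt))
                        i += 2
                    else:
                        chars.append(values[i])
                        i += 1
                i += 1  # consume the closing quote
                row.append("".join(chars))
            else:  # unquoted number or NULL, up to the next "," or ")"
                j = i
                while values[j] not in ",)":
                    j += 1
                token = values[i:j].strip()
                row.append(None if token == "NULL" else token)
                i = j
            if values[i] == ")":  # end of this tuple
                i += 1
                break
            i += 1  # consume the "," between fields
        rows.append(row)
    return rows


def dump_to_csv(dump_path, table, csv_path):
    """Write every row of `table` found in dump_path to csv_path (no header)."""
    prefix = f"INSERT INTO `{table}` VALUES "
    with open(dump_path, encoding="utf-8") as src, \
            open(csv_path, "w", newline="", encoding="utf-8") as dst:
        writer = csv.writer(dst)
        for line in src:
            if line.startswith(prefix):
                for row in parse_values(line[len(prefix):]):
                    # NULL becomes an empty field in the CSV.
                    writer.writerow(["" if v is None else v for v in row])
```

For example, dump_to_csv("database_dump.sql", "airbnb_open_data", "airbnb_open_data.csv") writes one CSV row per table row. The output has no header row, and csv.writer takes care of quoting values that contain commas or quotes.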

Using MySQL Workbench to Export Data as CSV

Method 1:

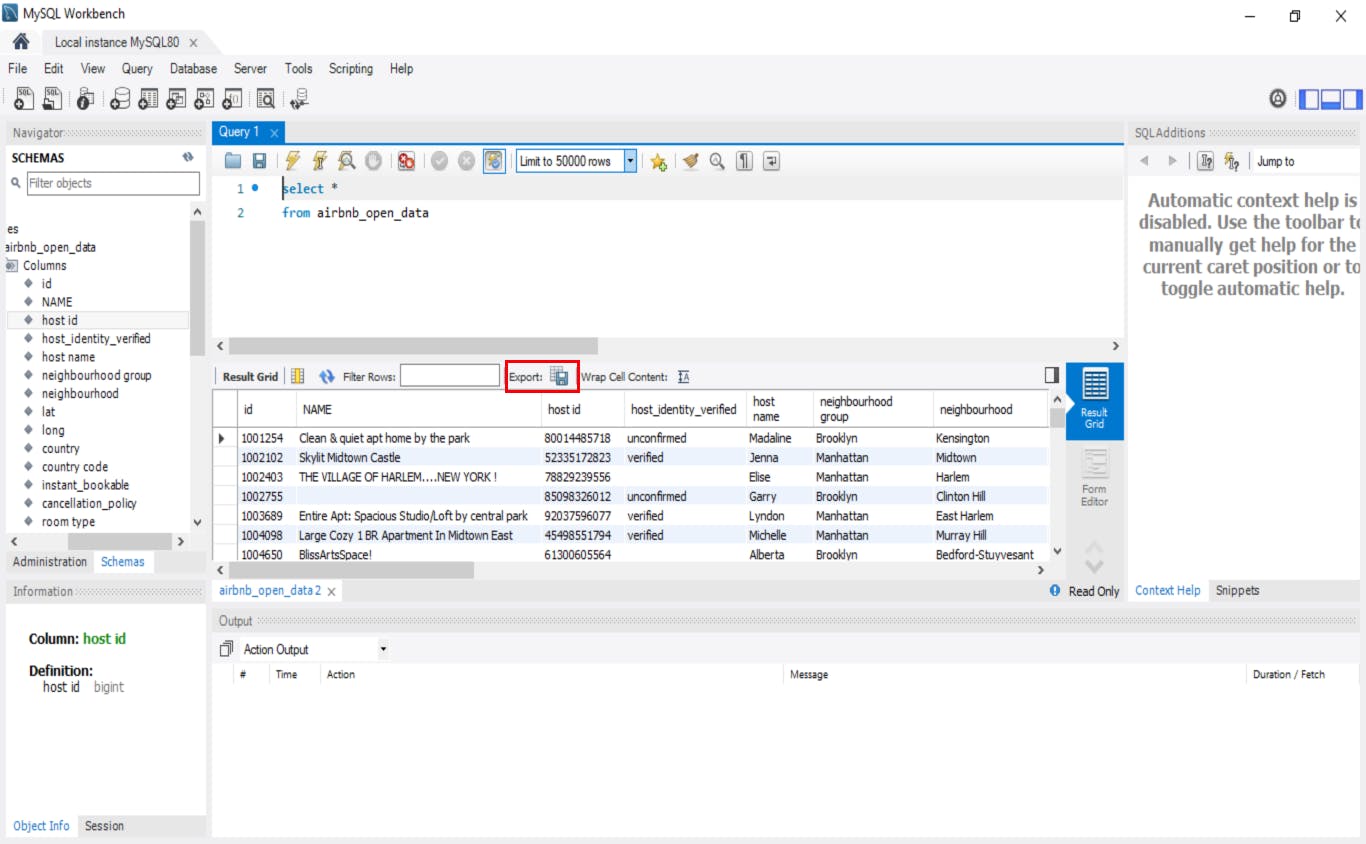

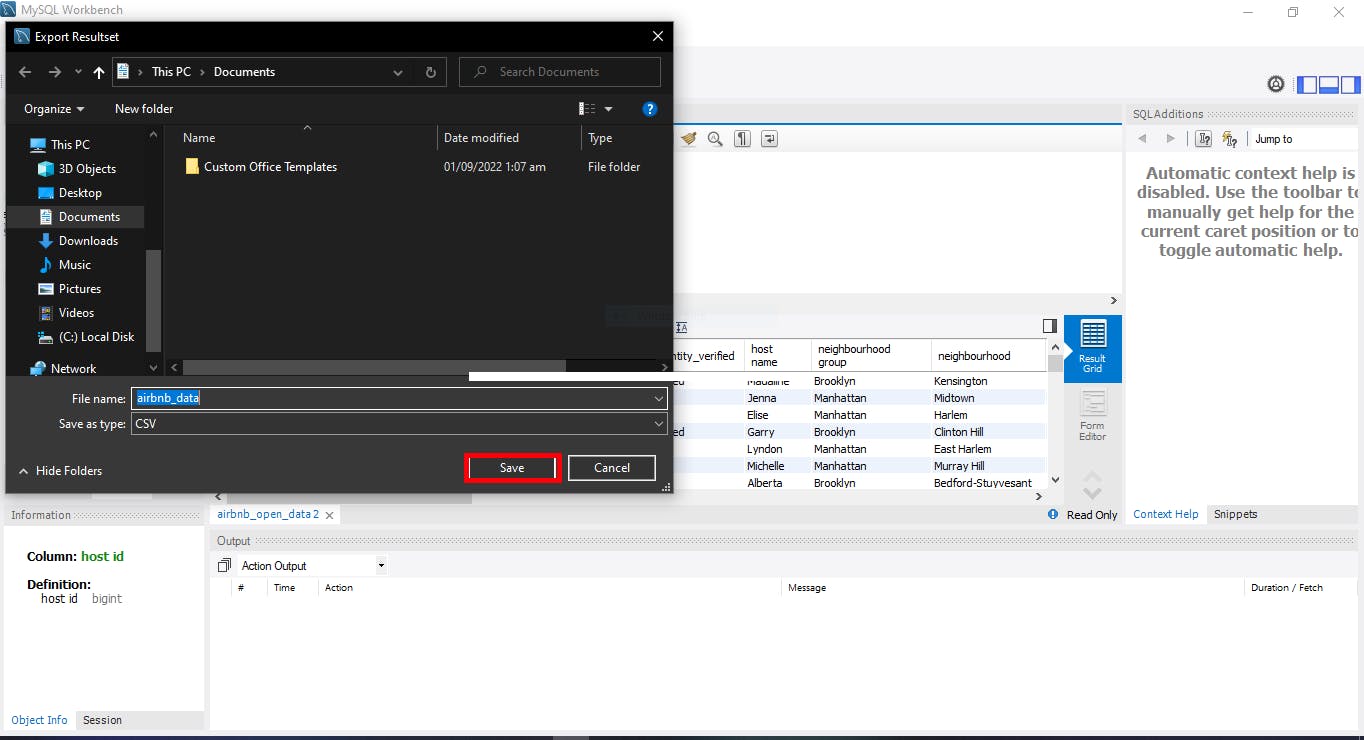

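Run a SELECT * FROM [table] query, then click “Export” in the result grid to save the results as a CSV file, as shown in the screenshots.

Method 2:

Alternatively, run the query below in MySQL Workbench to write the table directly to a CSV file. INTO OUTFILE creates the file on the MySQL server host, so your MySQL user needs the FILE privilege and the target folder must be permitted by the server's secure_file_priv setting.

```sql
SELECT *
FROM airbnb_open_data
INTO OUTFILE 'C:/Program Files (x86)/updated_airbnb.csv'
FIELDS TERMINATED BY ','
OPTIONALLY ENCLOSED BY '"'
ESCAPED BY '"'
LINES TERMINATED BY '\r\n';
```

Text values are wrapped in double quotes so that commas inside them do not split the column. NULL values are written using the escape character (as \N under MySQL's default settings) rather than as empty fields, so check how they appear in the file before loading it.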
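Whichever export method you use, a quick check before uploading can catch rows that would fail to load: if a comma or quote inside a value was not handled correctly, that row ends up with a different number of fields than the rest of the file, and a BigQuery load job fails on such rows by default. The helper below is a hypothetical sketch using Python's csv module.

```python
import csv

# Sketch: find CSV records whose field count differs from the first record,
# which usually means a delimiter or quote inside a value was not escaped.
# The function name is hypothetical.


def inconsistent_rows(lines):
    """Return (record_number, field_count) for records that don't match record 1."""
    expected = None
    bad = []
    for number, row in enumerate(csv.reader(lines), start=1):
        if expected is None:
            expected = len(row)  # the first record sets the expected width
        elif len(row) != expected:
            bad.append((number, len(row)))
    return bad
```

To check a file, open it with newline="" (as the csv module expects) and pass the file object, for example inconsistent_rows(open("updated_airbnb.csv", newline="", encoding="utf-8")). An empty list means every record has the same number of fields.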

A few things to note when migrating your data from MySQL to BigQuery:

- BigQuery does not enforce primary and unique keys.

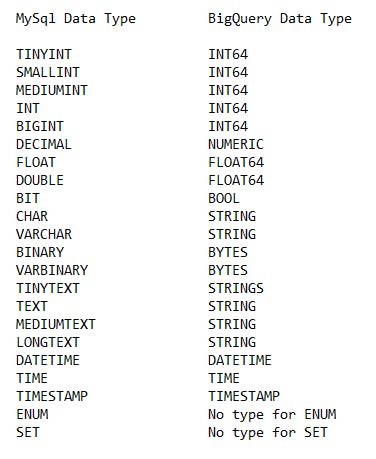

- Data types are slightly different between the two; some are convertible while others are equivalent, as seen below:
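A rough version of such a type mapping can also be expressed in code, for example to draft a BigQuery schema from the column types shown by SHOW CREATE TABLE. The sketch below reflects commonly used equivalents rather than an official or exhaustive table, so compare it with the comparison table for your own columns; the function name is hypothetical.

```python
import re

# Sketch: map a MySQL column type (as written in CREATE TABLE) to a BigQuery
# standard SQL type. A common convention, not an official table; adjust as needed.

_TYPE_MAP = {
    "tinyint": "INT64", "smallint": "INT64", "mediumint": "INT64",
    "int": "INT64", "integer": "INT64", "bigint": "INT64", "year": "INT64",
    "float": "FLOAT64", "double": "FLOAT64", "real": "FLOAT64",
    "bool": "BOOL", "boolean": "BOOL",
    "char": "STRING", "varchar": "STRING", "tinytext": "STRING",
    "text": "STRING", "mediumtext": "STRING", "longtext": "STRING",
    "enum": "STRING", "set": "STRING",
    "json": "STRING",  # kept as text; BigQuery's JSON functions accept strings
    "binary": "BYTES", "varbinary": "BYTES", "tinyblob": "BYTES",
    "blob": "BYTES", "mediumblob": "BYTES", "longblob": "BYTES",
    "date": "DATE", "time": "TIME",
    "datetime": "DATETIME",    # no time zone, like MySQL DATETIME
    "timestamp": "TIMESTAMP",  # MySQL stores TIMESTAMP values in UTC
}


def bigquery_type(mysql_type: str) -> str:
    t = mysql_type.strip().lower()
    # MySQL convention: TINYINT(1) holds boolean flags.
    if re.fullmatch(r"tinyint\(1\)(\s+unsigned)?", t):
        return "BOOL"
    match = re.match(r"[a-z]+", t)
    if match is None:
        raise ValueError(f"unrecognised MySQL type: {mysql_type!r}")
    base = match.group(0)
    # BIGINT UNSIGNED can exceed INT64's maximum, so use NUMERIC instead.
    if base == "bigint" and "unsigned" in t:
        return "NUMERIC"
    if base in ("decimal", "numeric"):
        digits = re.search(r"\((\d+)(?:\s*,\s*(\d+))?\)", t)
        precision = int(digits.group(1)) if digits else 10  # MySQL default
        scale = int(digits.group(2)) if digits and digits.group(2) else 0
        # NUMERIC allows up to 29 integer digits and 9 fractional digits.
        if scale <= 9 and precision - scale <= 29:
            return "NUMERIC"
        return "BIGNUMERIC"
    if base not in _TYPE_MAP:
        raise ValueError(f"unrecognised MySQL type: {mysql_type!r}")
    return _TYPE_MAP[base]
```

Unknown types raise an error instead of silently falling back to STRING, so nothing is mapped by accident.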

Creating a new project on BigQuery

Prerequisites:

- A Google BigQuery account or a BigQuery sandbox account.

- Knowledge of databases and data warehouses

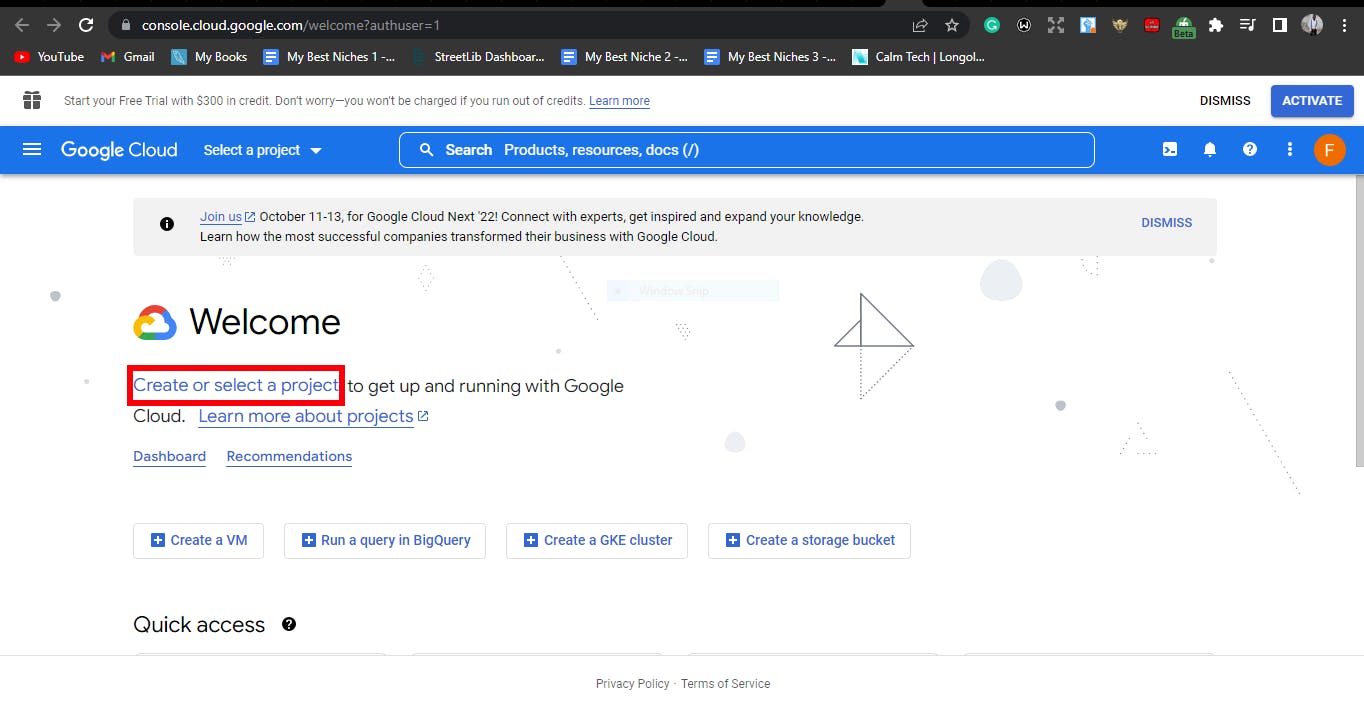

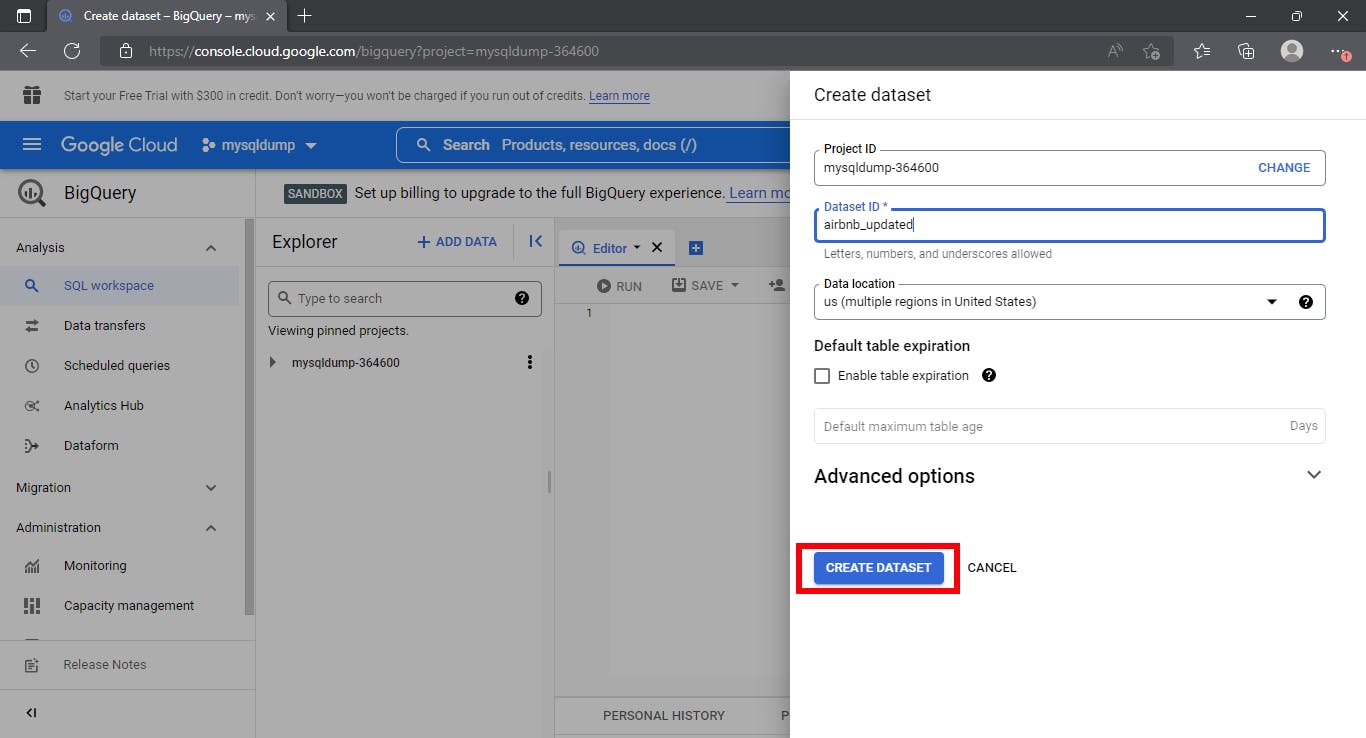

I will be using a sandbox account for this tutorial. After exporting the table as CSV, log in to your BigQuery account at console.cloud.google.com.

Create a new project.

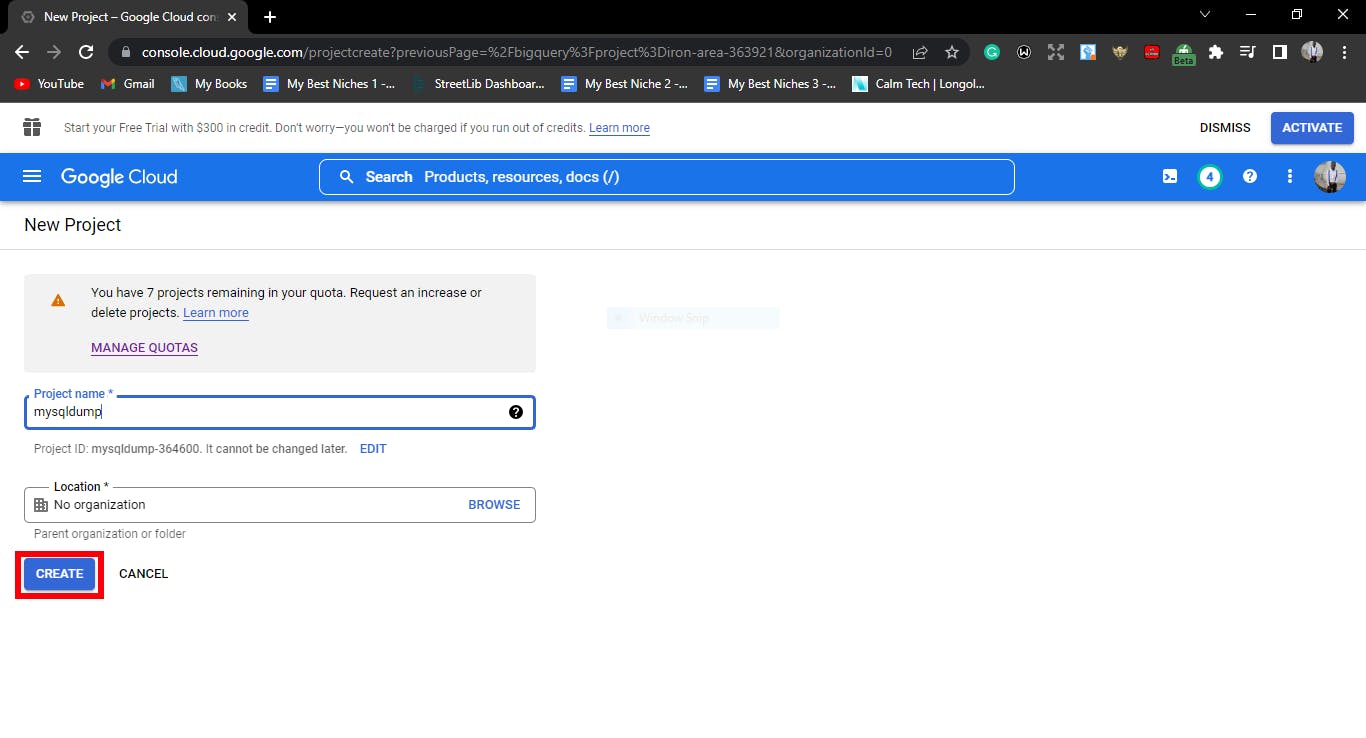

Type in the name of the project and click on Create.

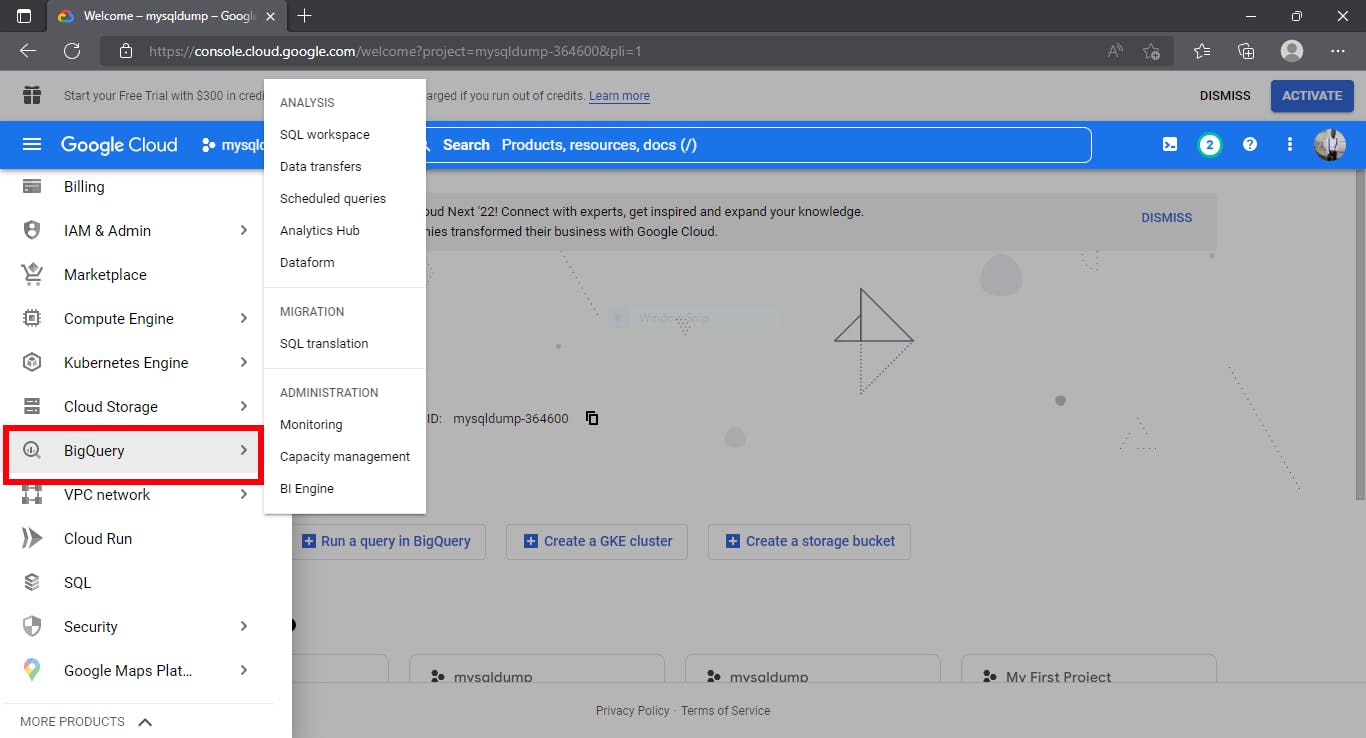

After creating a project, search for BigQuery on the left panel.

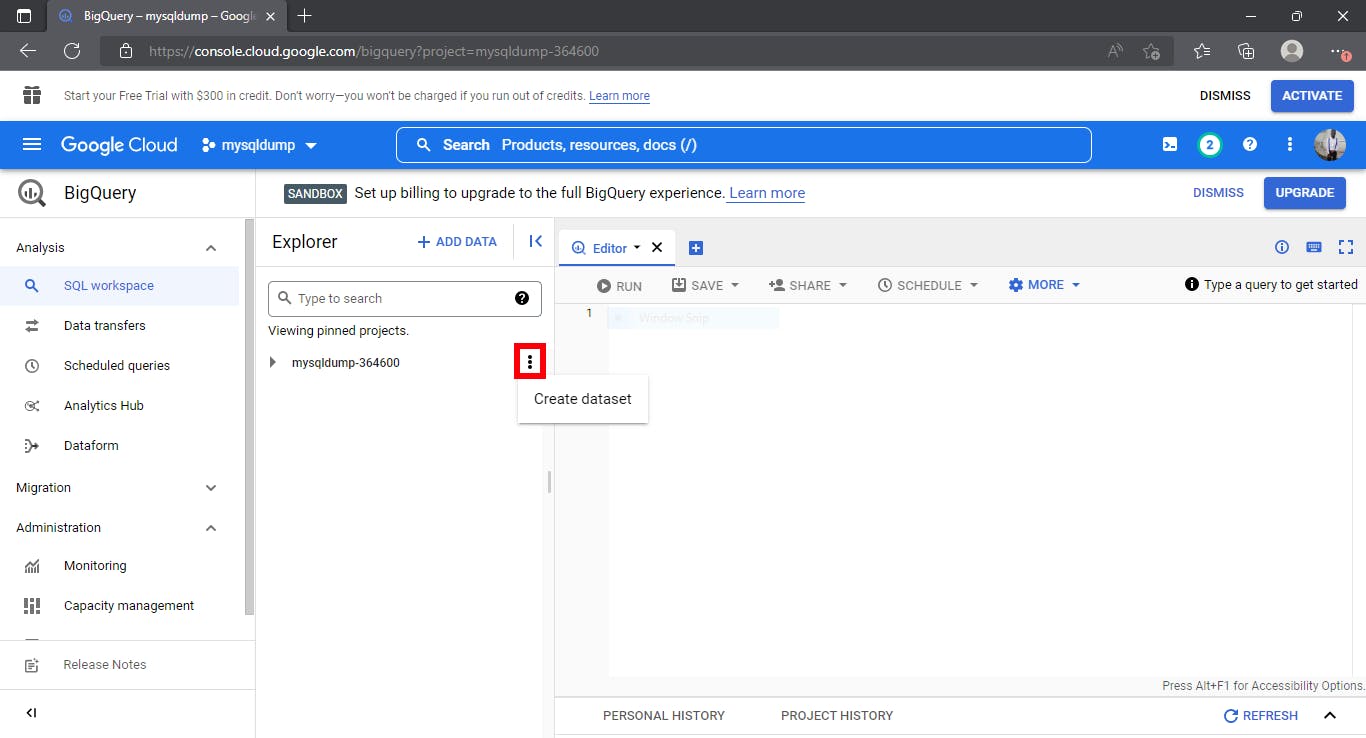

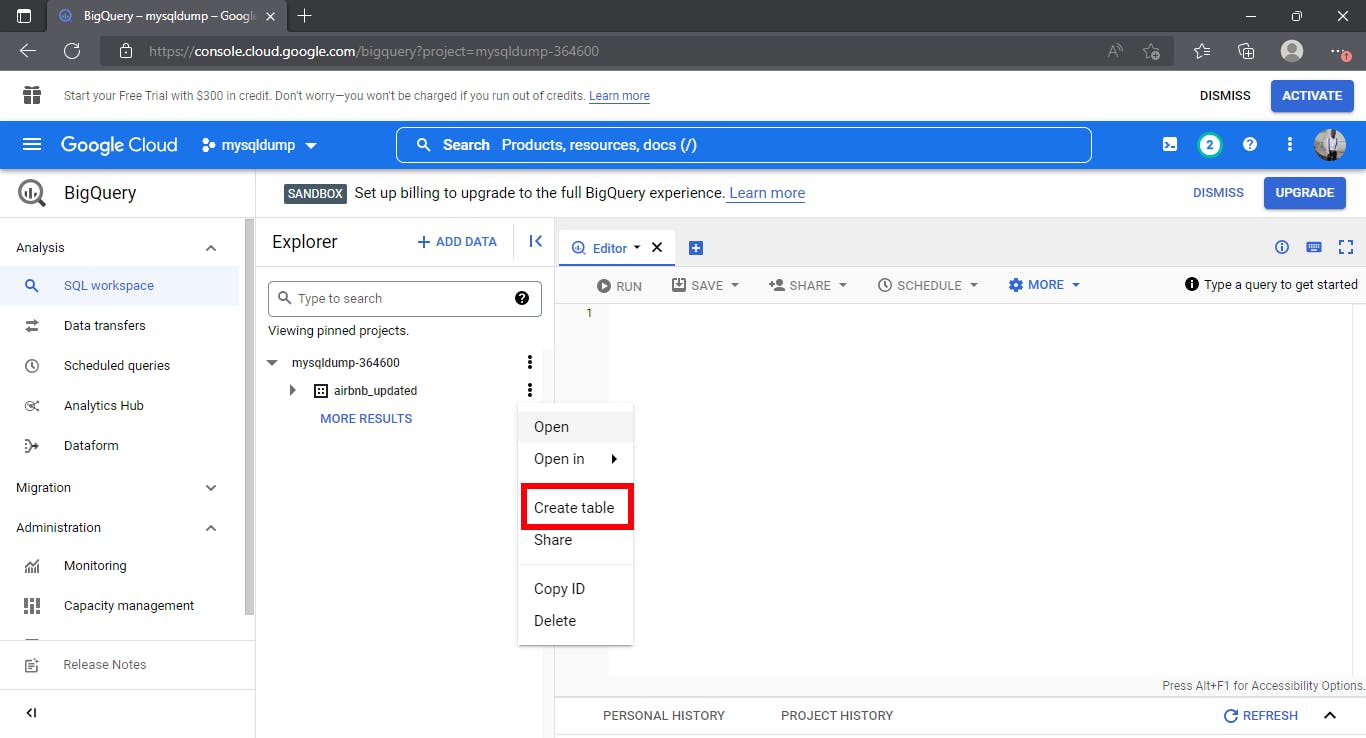

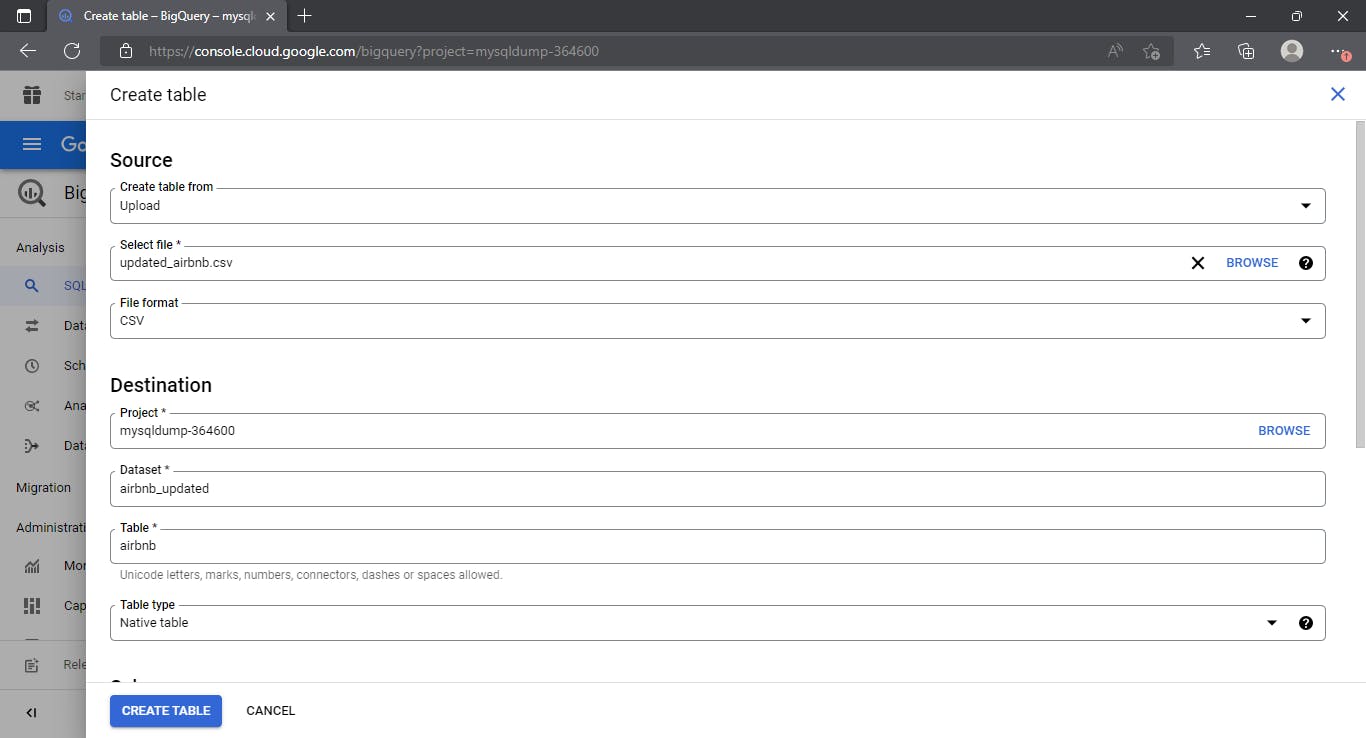

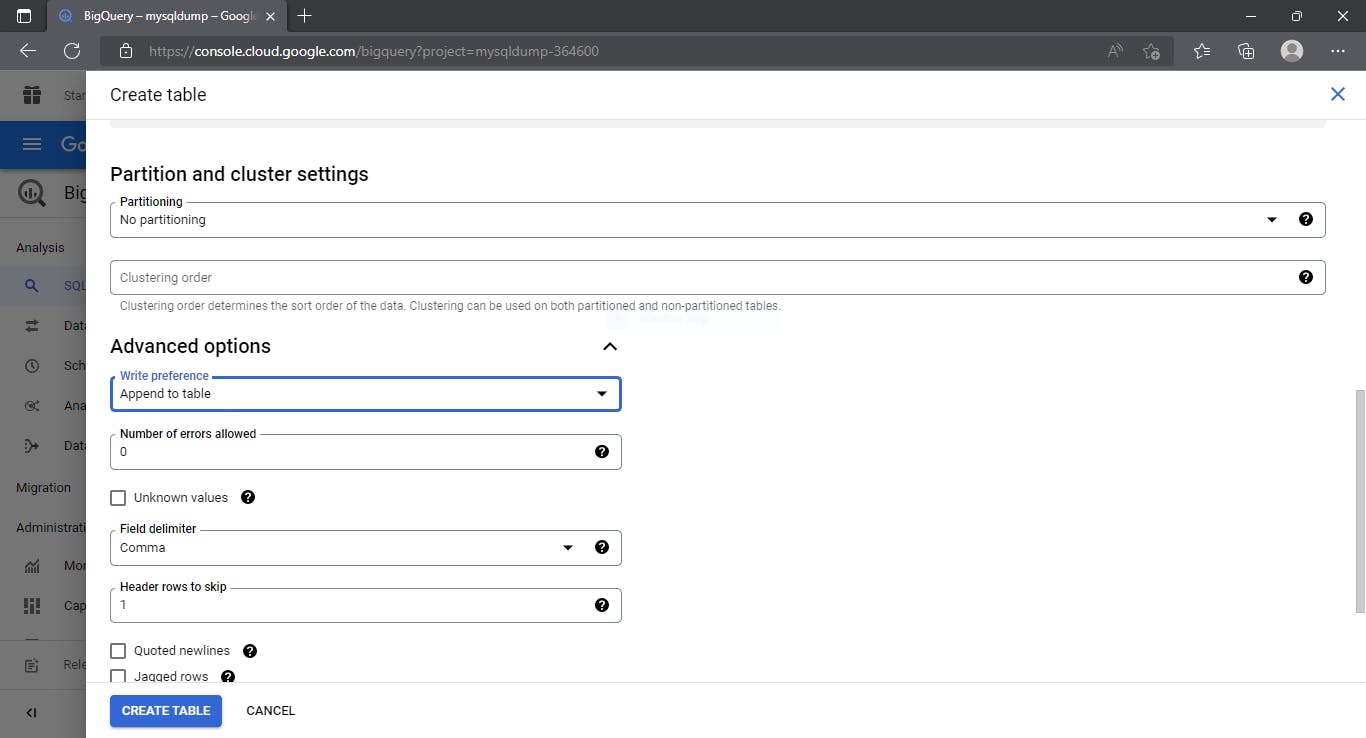

How to load a CSV file into BigQuery

First, you need to create a dataset.
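If you prefer scripting this step, the dataset can also be created with the google-cloud-bigquery Python client library. The sketch below assumes the library is installed (pip install google-cloud-bigquery) and that application default credentials are configured; the project ID and dataset name are hypothetical, so replace them with your own.

```python
# Sketch: create the dataset that will hold the exported MySQL table.
# Assumptions: google-cloud-bigquery is installed and credentials are configured.
# "my-airbnb-project" and "airbnb" are hypothetical names; use your own.

PROJECT_ID = "my-airbnb-project"
DATASET_ID = "airbnb"
LOCATION = "US"  # a dataset's location cannot be changed after creation


def dataset_ref(project_id: str, dataset_id: str) -> str:
    """Return the fully qualified dataset ID in 'project.dataset' form."""
    return f"{project_id}.{dataset_id}"


def create_dataset(project_id: str = PROJECT_ID,
                   dataset_id: str = DATASET_ID,
                   location: str = LOCATION):
    # Imported inside the function so the helper above works without the library.
    from google.cloud import bigquery

    client = bigquery.Client(project=project_id)
    dataset = bigquery.Dataset(dataset_ref(project_id, dataset_id))
    dataset.location = location
    # exists_ok=True makes the call safe to re-run if the dataset already exists.
    return client.create_dataset(dataset, exists_ok=True)
```

Calling create_dataset() returns the created (or existing) dataset, whose dataset_id attribute you can print to confirm it worked.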

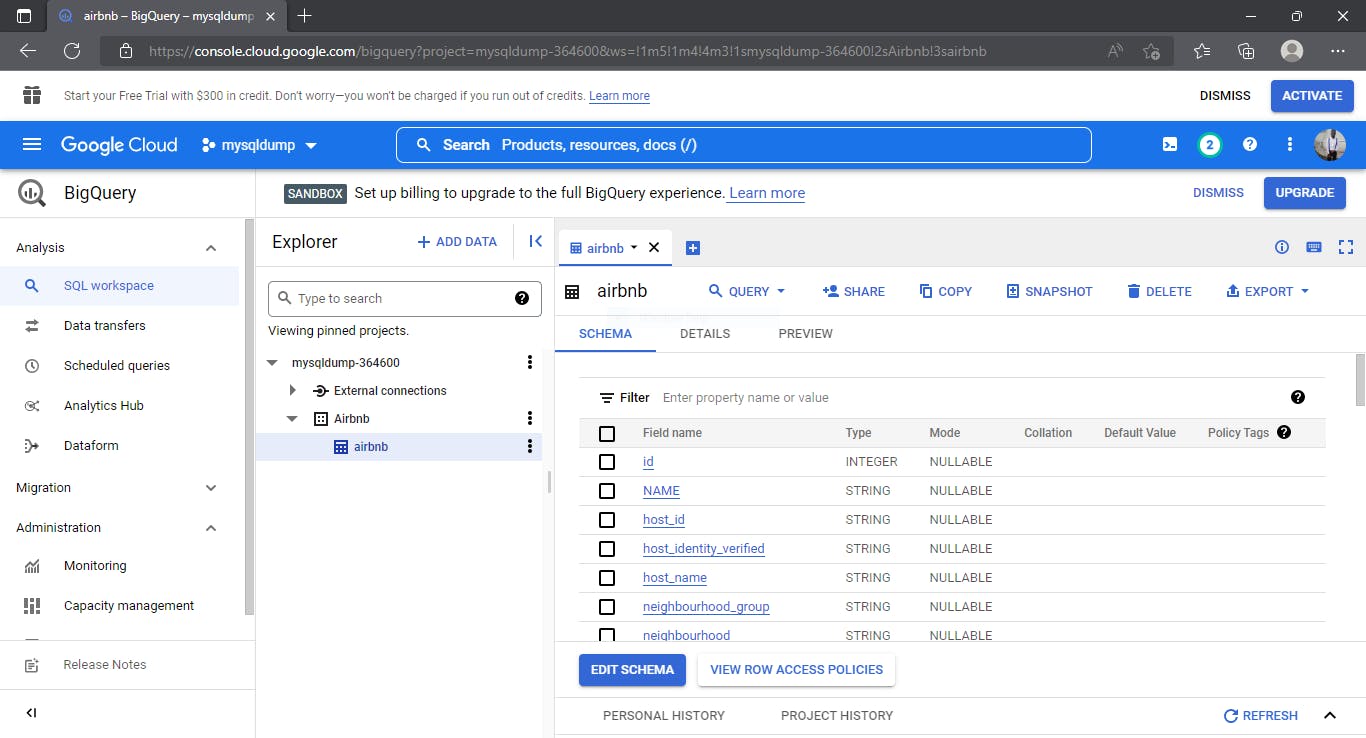

Depending on the volume of data being uploaded, the upload might take a while. When it completes, you get the result shown in the screenshot.
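The upload can also be run as a load job from Python. This sketch assumes google-cloud-bigquery is installed, application default credentials are configured, and the dataset already exists; the table ID is hypothetical. Workbench's CSV export normally includes a header row, while a file written with INTO OUTFILE does not, so pass has_header=False for the latter.

```python
# Sketch: load a local CSV file into a BigQuery table with a load job.
# Assumptions: google-cloud-bigquery is installed, application default
# credentials are configured, and the dataset already exists.
# TABLE_ID is a hypothetical "project.dataset.table" name.

TABLE_ID = "my-airbnb-project.airbnb.airbnb_open_data"


def load_csv(path: str, table_id: str = TABLE_ID, has_header: bool = True) -> int:
    """Load the CSV at `path` into `table_id` and return the table's row count."""
    # Imported inside the function so the module loads without the library.
    from google.cloud import bigquery

    client = bigquery.Client()
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.CSV,
        # Workbench exports normally have a header row; INTO OUTFILE output has none.
        skip_leading_rows=1 if has_header else 0,
        autodetect=True,  # let BigQuery infer column names and types
        # Replace the table's contents so re-running does not duplicate rows.
        write_disposition=bigquery.WriteDisposition.WRITE_TRUNCATE,
    )
    with open(path, "rb") as source_file:
        job = client.load_table_from_file(source_file, table_id, job_config=job_config)
    job.result()  # block until the job finishes; raises if the load failed
    return client.get_table(table_id).num_rows
```

For example, load_csv("updated_airbnb.csv", has_header=False). WRITE_TRUNCATE is used because BigQuery does not enforce primary keys, so appending the same file twice would silently duplicate rows. Without a header row, autodetected columns get generic names, so you may prefer to pass an explicit schema instead.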

You can now start querying and analyzing the data, taking advantage of BigQuery's speed, high availability, and storage.
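To keep an eye on the free tier (1 TB of query processing per month), you can dry-run a query first: a dry run reports how many bytes the query would scan without actually running it. The sketch below assumes google-cloud-bigquery is installed and credentials are configured; the table ID and helper names are hypothetical.

```python
# Sketch: dry-run a query to see how much of the free tier it would use,
# then run it. Assumptions: google-cloud-bigquery is installed, credentials
# are configured, and TABLE_ID (hypothetical) is the table you loaded.

TABLE_ID = "my-airbnb-project.airbnb.airbnb_open_data"
FREE_TIER_BYTES = 1024 ** 4  # Google states the free tier as 1 TiB per month


def free_tier_share(bytes_processed: int) -> float:
    """Fraction of the monthly free query allowance a scan would use."""
    return bytes_processed / FREE_TIER_BYTES


def run_query(sql: str):
    """Print the estimated scan size, then run the query and return its rows."""
    # Imported inside the function so the helper above works without the library.
    from google.cloud import bigquery

    client = bigquery.Client()
    # A dry run validates the query and estimates bytes scanned at no cost.
    dry_run = client.query(
        sql,
        job_config=bigquery.QueryJobConfig(dry_run=True, use_query_cache=False),
    )
    scanned = dry_run.total_bytes_processed
    print(f"Estimated scan: {scanned:,} bytes "
          f"({free_tier_share(scanned):.4%} of the monthly free tier)")
    return list(client.query(sql).result())
```

For example, rows = run_query(f"SELECT COUNT(*) AS listings FROM `{TABLE_ID}`") followed by rows[0]["listings"]. The backticks around the table ID are needed because the project ID contains hyphens.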

For more details, check out the BigQuery documentation.